OpenAI Just Made Building AI Agents 10x Easier (And Faster)

OpenAI's new AgentKit makes building production-ready AI agents 10x faster. Learn what changed, real operator results, and how to start this week.

Here’s the thing about AI agents…

They’ve been promising to transform how we work for months now. But the reality? Most teams spent weeks wrangling fragmented tools, debugging mysterious failures, and watching their agents crash in production.

That changed this week.

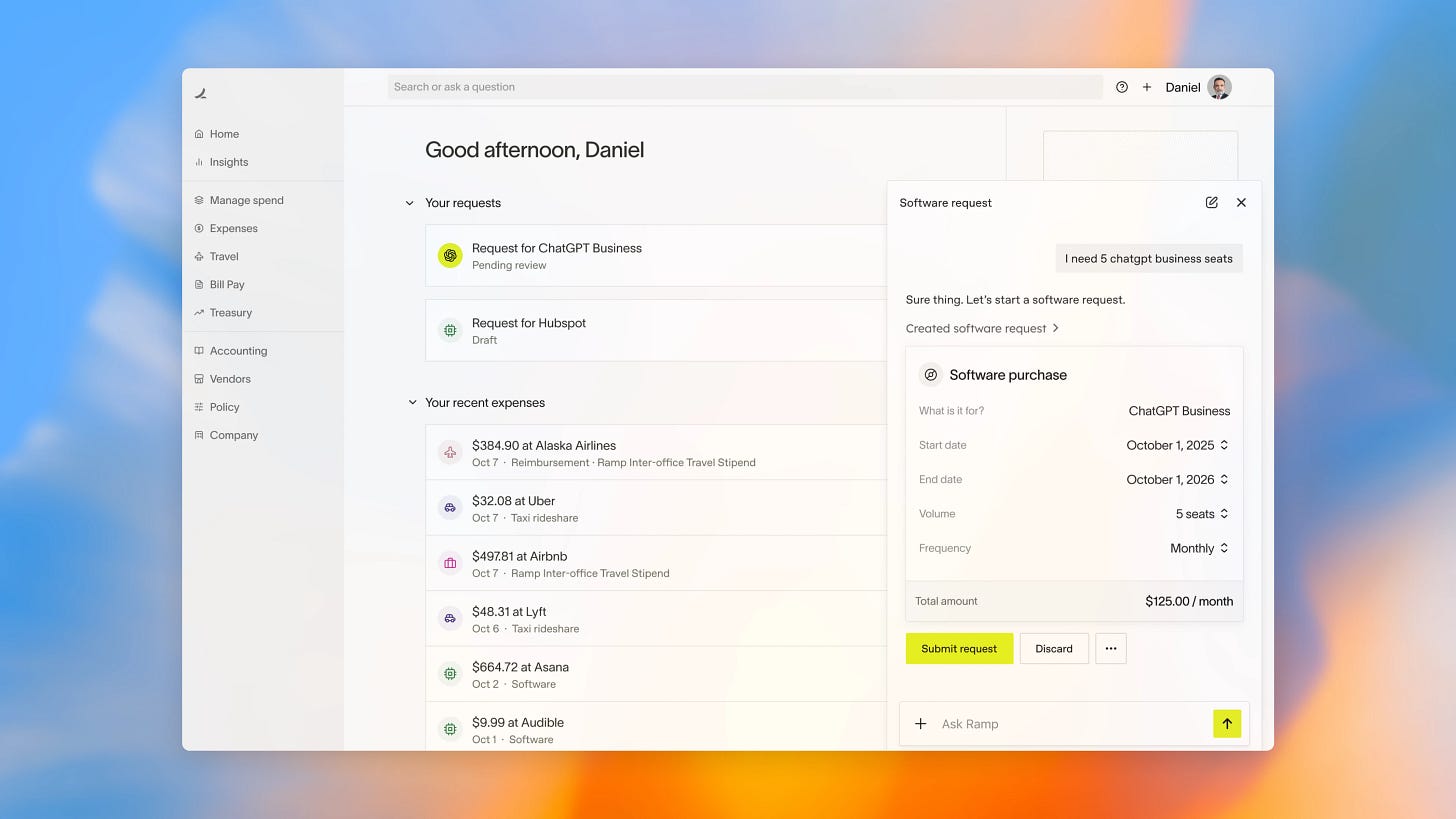

OpenAI just dropped AgentKit — and honestly, it’s the first time building production-ready AI agents feels… approachable. Companies like Ramp went from blank canvas to working buyer agent in hours instead of months, slashing iteration cycles by 70%. LY Corporation built a complete multi-agent workflow in under two hours.

If you’ve been waiting for the right moment to build AI agents into your business… this might be it.

Today’s Partner: Scriptbee.ai

Scriptbee.ai helps enterprises and startups become visible in AI Search. Think of it as SEO, but for the new world where people ask AI instead of Google.

The analytics show you exactly which queries trigger your brand, where you rank, and how to grow your share of AI-generated recommendations. No black box. Just clear visibility into the fastest-growing discovery channel in tech.

What AgentKit Actually Does (And Why It Matters)

Let’s cut through the hype.

AgentKit is a complete toolkit for building, deploying, and optimizing AI agents. But the real story isn’t the tools themselves — it’s what they replace.

Before AgentKit, building an agent meant:

Cobbling together orchestration frameworks

Writing custom connectors for your data

Manually reviewing thousands of trace logs to find failures

Weeks of frontend work before you could even test with users

Crossing your fingers every time you pushed to production

After AgentKit? Most of that goes away.

Agent Builder: Your Visual Canvas for Complex Workflows

Agent Builder provides a drag-and-drop interface for composing multi-agent workflows with version control, inline evaluation, and preview runs.

What this means for operators: You can now map out your agent’s logic visually. No more black boxes. Your product team, legal team, and engineers can all look at the same canvas and understand exactly what the agent will do.

Ramp’s team reports slashing iteration cycles by 70%, getting an agent live in two sprints rather than two quarters. That’s the difference between testing an idea this month versus next year.

ChatKit: Deploy Conversational Interfaces in Minutes

Here’s where it gets practical.

ChatKit lets you embed customisable chat-based agent experiences directly into your product, handling streaming responses, thread management, and thinking displays.

One operator at Canva put it perfectly: “We saved over two weeks building a support agent with ChatKit and integrated it in less than an hour”.

Two weeks of build time saved, and integration in under an hour. That math works.

Evals Platform: Finally Know If Your Agent Actually Works

This is the unglamorous part everyone avoids… until their agent hallucinates in front of a customer.

The new Evals capabilities include datasets for rapid test building, trace grading for end-to-end workflow assessments, automated prompt optimization, and support for third-party models.
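To make "trace grading" a little more concrete, here is a minimal sketch of the idea in plain Python: check each step of an agent run on its own, so you can see where a workflow went wrong rather than only that the final answer was bad. This is not the Evals platform API. The `Step` type, the step names, and the checks are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    """One recorded step of an agent run (hypothetical trace format)."""
    name: str    # e.g. "retrieve" or "final_answer"
    output: str  # what the step produced


def grade_trace(trace: list[Step], checks: dict[str, Callable[[str], bool]]) -> dict[str, bool]:
    """Apply a per-step check to each step; return step name -> pass/fail."""
    results: dict[str, bool] = {}
    for step in trace:
        check = checks.get(step.name)
        # Steps without a check are skipped rather than counted as passes.
        if check is not None:
            results[step.name] = bool(check(step.output))
    return results


def first_failure(results: dict[str, bool]) -> Optional[str]:
    """Return the name of the earliest failing step, or None if all passed."""
    for name, passed in results.items():
        if not passed:
            return name
    return None


# Illustrative run: retrieval found the right policy, the answer misquoted it.
trace = [
    Step("retrieve", "refund policy: 30 days"),
    Step("final_answer", "You can get a refund within 90 days."),
]
checks = {
    "retrieve": lambda out: "refund policy" in out,
    "final_answer": lambda out: "30 days" in out,
}
results = grade_trace(trace, checks)
print(results, "first failure:", first_failure(results))
```

In practice the checks would often be model-graded rather than substring matches, but the shape is the same: grade the steps, then look at the earliest failure first.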

Carlyle’s team reports cutting development time by over 50% and increasing agent accuracy by 30% using the evaluation platform.

Think about that: 30% more accurate agents, in half the time. That’s not incremental — that’s a different category of reliability.

The Guardrails You Didn’t Know You Needed

Guardrails provide an open-source, modular safety layer that helps protect agents against unintended or malicious behaviour, including PII masking, jailbreak detection, and other safeguards.
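If "PII masking" sounds abstract, the core idea fits in a few lines. The sketch below is not the Guardrails library and does not use its API. It is a hand-rolled illustration with two deliberately simple regex patterns (both assumptions), and real PII detection needs far broader coverage than this.

```python
import re

# Illustrative patterns only: emails and phone-like digit runs.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


def guarded_reply(agent_reply: str) -> str:
    """Hypothetical output guardrail: mask PII before a reply leaves the system."""
    return mask_pii(agent_reply)


print(guarded_reply("Reach Jane at jane.doe@example.com or +1 (555) 010-9999."))
```

The design point is where the check sits: between the agent and the user, so a bad generation gets cleaned up before anyone sees it.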

You know what’s more expensive than building guardrails? Explaining to your board why your agent leaked customer data.

The Apps SDK: Agents That Live Inside ChatGPT

While AgentKit helps you build agents for your own products, OpenAI also launched something else this week: Apps in ChatGPT. Powered by the new Apps SDK, it lets developers create conversational apps that reach over 800 million ChatGPT users.

Early partners include Booking.com, Canva, Coursera, Figma, Expedia, Spotify, and Zillow.

The interesting bit? The Apps SDK builds on the Model Context Protocol (MCP), an open standard, so apps built with it can run anywhere that adopts the standard.

What this means: If you’re building B2C agent experiences, you can now distribute them where people already spend time. No need to convince users to download yet another app.
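For a feel of what "builds on MCP" means on the wire: MCP messages are JSON-RPC 2.0, and a client asks a server to run a tool with a `tools/call` request. The sketch below only builds that message in Python. The tool name `search_listings` and its arguments are hypothetical, and this is not Apps SDK code.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool exposed by a hypothetical real-estate app.
request = build_tool_call(1, "search_listings", {"city": "Austin", "max_price": 500000})
print(request)
```

Because the message format is a shared standard rather than something ChatGPT-specific, the same server can in principle answer any client that speaks MCP.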

What This Changes (Practically Speaking)

Let’s be real about what just shifted.

For solo founders and small teams: You can now build and ship agent-powered features without hiring a specialist AI team. The visual tools and pre-built components lower the barrier dramatically.

For mid-sized companies: Your existing engineering team can move faster. ChatKit already powers use cases from internal knowledge assistants and onboarding guides to customer support and research agents. Pick one workflow that’s currently manual, build an agent, ship it this quarter.

For enterprises: The Connector Registry consolidates data sources across ChatGPT and the API into a single admin panel, working with pre-built connectors like Dropbox, Google Drive, SharePoint, and Microsoft Teams. Finally, governance that doesn’t require rebuilding everything.

The pattern I’m seeing: Teams that struggled for months to get one agent working are now shipping multiple agent workflows in weeks.

The Honest Challenges (Because Nothing’s Perfect)

A few things worth considering:

Learning curve still exists: Visual tools help, but you still need to understand agent architectures, evaluation metrics, and failure modes. AgentKit makes it easier, not automatic.

Costs can escalate: More agents mean more API calls. Monitor your usage carefully, especially during testing. One founder told me their eval runs alone cost $2,000 before they optimised.

Integration complexity: While ChatKit can be embedded into apps or websites and customised to match your theme, connecting your existing data sources and workflows still requires planning. It’s faster than before, but not instant.
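On the cost point above, a back-of-the-envelope estimate before you kick off eval runs can save a surprise. Here is a rough sketch of the arithmetic. Every number in the example (case count, token counts, per-million-token prices) is a placeholder assumption, not real pricing, so plug in your own.

```python
def eval_run_cost(
    cases: int,
    runs: int,
    input_tokens: int,
    output_tokens: int,
    usd_per_m_input: float,
    usd_per_m_output: float,
) -> float:
    """Estimate total USD spend for repeated eval runs.

    Token counts are per case; prices are per million tokens.
    """
    cost_units_per_case = input_tokens * usd_per_m_input + output_tokens * usd_per_m_output
    return cases * runs * cost_units_per_case / 1_000_000


# Placeholder scenario: 500 test cases, 20 full runs while iterating.
estimate = eval_run_cost(
    cases=500,
    runs=20,
    input_tokens=4_000,
    output_tokens=800,
    usd_per_m_input=2.00,   # assumed price, not a real quote
    usd_per_m_output=8.00,  # assumed price, not a real quote
)
print(f"Estimated eval spend: ${estimate:,.2f}")
```

The two levers that usually matter most are `runs` and `input_tokens`: fewer full reruns and shorter prompts both cut the total directly.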

What To Do This Week

If you’re considering AI agents (and you probably should be), here’s where to start:

Identify one workflow in your business that’s repetitive but requires some judgment. Customer support, lead qualification, data entry with validation — something that’s currently eating hours.

Test with Developer Mode — OpenAI’s documentation includes guidelines and example apps you can test using Developer Mode in ChatGPT. Try building a simple agent before committing resources.

Start with evaluation — Before building anything fancy, set up basic evals. Define what “good” looks like for your use case. The teams getting real value from agents aren’t the ones with the fanciest architecture — they’re the ones who can reliably measure if their agent is improving.

Budget for iteration — Plan for 3-4 rounds of testing before you ship. Every team I’ve talked to says their first version wasn’t good enough. The difference now is you can do those iterations in days instead of months.
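The "start with evaluation" step above can be sketched in a few lines: write down what "good" looks like as test cases, then measure every agent version against the same set. This is a minimal illustration in plain Python, not the Evals platform. The cases, the substring-based pass criterion, and the two stand-in agents are all made up for the example.

```python
from typing import Callable

# Hypothetical eval set: (user input, phrase a good answer must contain).
EVAL_CASES = [
    ("How long do refunds take?", "5 business days"),
    ("Can I change my plan mid-cycle?", "prorated"),
    ("Do you offer phone support?", "chat"),
]


def pass_rate(agent: Callable[[str], str], cases: list[tuple[str, str]]) -> float:
    """Fraction of cases where the agent's answer contains the required phrase."""
    passed = sum(
        1 for prompt, must_contain in cases
        if must_contain.lower() in agent(prompt).lower()
    )
    return passed / len(cases)


def agent_v1(prompt: str) -> str:
    """Stand-in for a first version that deflects everything."""
    return "Please contact support."


def agent_v2(prompt: str) -> str:
    """Stand-in for an improved version that answers two of the three cases."""
    answers = {
        "How long do refunds take?": "Refunds arrive within 5 business days.",
        "Can I change my plan mid-cycle?": "Yes, the change is prorated.",
    }
    return answers.get(prompt, "Please contact support.")


for name, agent in [("v1", agent_v1), ("v2", agent_v2)]:
    print(name, f"{pass_rate(agent, EVAL_CASES):.0%}")
```

Even a crude score like this gives each of those 3-4 testing rounds a number to beat, which is the whole point.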

The Bigger Picture

Here’s what matters beyond the tooling…

We’re moving from “can we build an AI agent?” to “how fast can we ship one that actually works?”

Since releasing the Responses API and Agents SDK in March, developers have built end-to-end agentic workflows for deep research and customer support. Klarna’s support agent, for example, now handles two-thirds of all tickets.

That’s not a demo. That’s production. At scale.

The companies winning next year won’t be the ones with the best AI strategy deck. They’ll be the ones who shipped three agent-powered workflows this quarter, learned what worked, and are shipping three more next quarter.

AgentKit just made that playbook available to everyone.

YC Startup Spotlight: Atla

If you’re building AI agents, you need to know about Atla (YC S23).

Here’s the problem they solve: Agent failures hide inside long traces and are difficult to spot at scale, so teams end up manually reviewing thousands of traces without a clear signal.

What Atla does: Their LLM judge evaluates your agent step-by-step, uncovers error patterns across runs, and suggests specific fixes, alongside real-time monitoring and automated error detection.

Why it matters: The gap between “my agent works in testing” and “my agent works in production” is where most projects die. Atla helps you find and fix critical failures in hours instead of days.

They’ve trained purpose-built evaluation models (Selene and Selene Mini) that have been downloaded 60,000+ times. If AgentKit is how you build agents, Atla is how you make sure they don’t break.

Worth checking out → atla-ai.com

One Question For You

What’s the one workflow in your business you’d trust an AI agent to handle tomorrow if you knew it would work reliably?

Hit reply and let me know. I’m genuinely curious what operators are thinking about here.

Let’s Keep This Going

Building AI agents is moving fast. Too fast to figure everything out alone.

If you’re navigating the shift to AI-powered operations — whether you’re building agents, evaluating tools, or just trying to figure out what matters — I’m here to help.

I work with founders, operators, and teams who are tired of hype and want practical guidance on what actually works. Real conversations about real challenges.

Book a call → topmate.io/chalkmeout

Or connect with me on LinkedIn → linkedin.com/in/chalkmeout

Always happy to chat about what you’re building.

— Narayanan